The Platform that Enables AI

Where Compute Meets Expertise

API: Simply Ship Open Models

High-quality | Advanced | Secure

Model Library

We have built a library of open-source models spanning a broad range of model types and domains. Users can call these models directly through our API, with no additional development or adaptation.

Instantly allocated GPU resources and ready-to-go AI resources

End-to-End Secure Operations

Our proprietary GPU management platform offers real-time monitoring, health alerts, and resource optimization. Backed by 24/7 support, we ensure peak cluster performance and stability.

Customized Service

We provide dedicated AI infrastructure and full-lifecycle AI services, such as model fine-tuning and agent customization tailored to your needs, to help enterprises grow faster, smarter, and more cost-effectively.

Canopy Wave Private Cloud

Industry-leading GPU cluster performance with 99.99% uptime. All of your GPUs sit in the same data center, so your workloads and data stay private and protected.

Pay for What You Use

Pay wholesale prices only for the AI-related resources you actually consume. No hidden fees.

NVIDIA GB200, B200, H100, and H200 GPUs now available

NVIDIA GB200 NVL72

$9/GPU/hr

- 18x compute trays per rack
- 36x Grace CPUs, 72x Blackwell GPUs
- Up to 13.4 TB HBM3e | 576 TB/s
- 2,592 Arm® Neoverse V2 cores
- Up to 17 TB LPDDR5X | Up to 18.4 TB/s
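As a rough sketch of pay-as-you-go billing, the Python snippet below estimates on-demand cost from the listed GB200 NVL72 rate. It assumes a flat $9/GPU/hr charge billed per hour, with no minimum commitment or extra fees; `estimate_cost` and the constant names are hypothetical, and actual invoices may follow different terms.

```python
# Hypothetical on-demand cost estimator. Assumes the advertised flat
# $9/GPU/hr GB200 NVL72 rate, per-hour billing, and no minimums or extra fees.
GB200_RATE_PER_GPU_HR = 9.0  # USD, from the listed price
GPUS_PER_NVL72_RACK = 72     # 18 compute trays x 4 Blackwell GPUs per tray


def estimate_cost(num_gpus: int, hours: float,
                  rate_per_gpu_hr: float = GB200_RATE_PER_GPU_HR) -> float:
    """Return the estimated cost in USD for num_gpus running for the given hours."""
    if num_gpus < 0 or hours < 0:
        raise ValueError("num_gpus and hours must be non-negative")
    return num_gpus * hours * rate_per_gpu_hr


if __name__ == "__main__":
    # One full NVL72 rack for one hour: 72 GPUs x $9
    print(f"Full rack, 1 hour: ${estimate_cost(GPUS_PER_NVL72_RACK, 1):,.2f}")
    # Eight GPUs for a 24-hour fine-tuning run: 8 x 24 x $9
    print(f"8 GPUs, 24 hours: ${estimate_cost(8, 24):,.2f}")
```

Under these assumptions, a full 72-GPU rack comes to $648 per hour (72 × $9), and eight GPUs for a day come to $1,728.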

Providing secure and efficient solutions for different use cases

AI Model Training


Inference

Rendering

GB200 Cluster Network Solution

Networking Hardware Solution

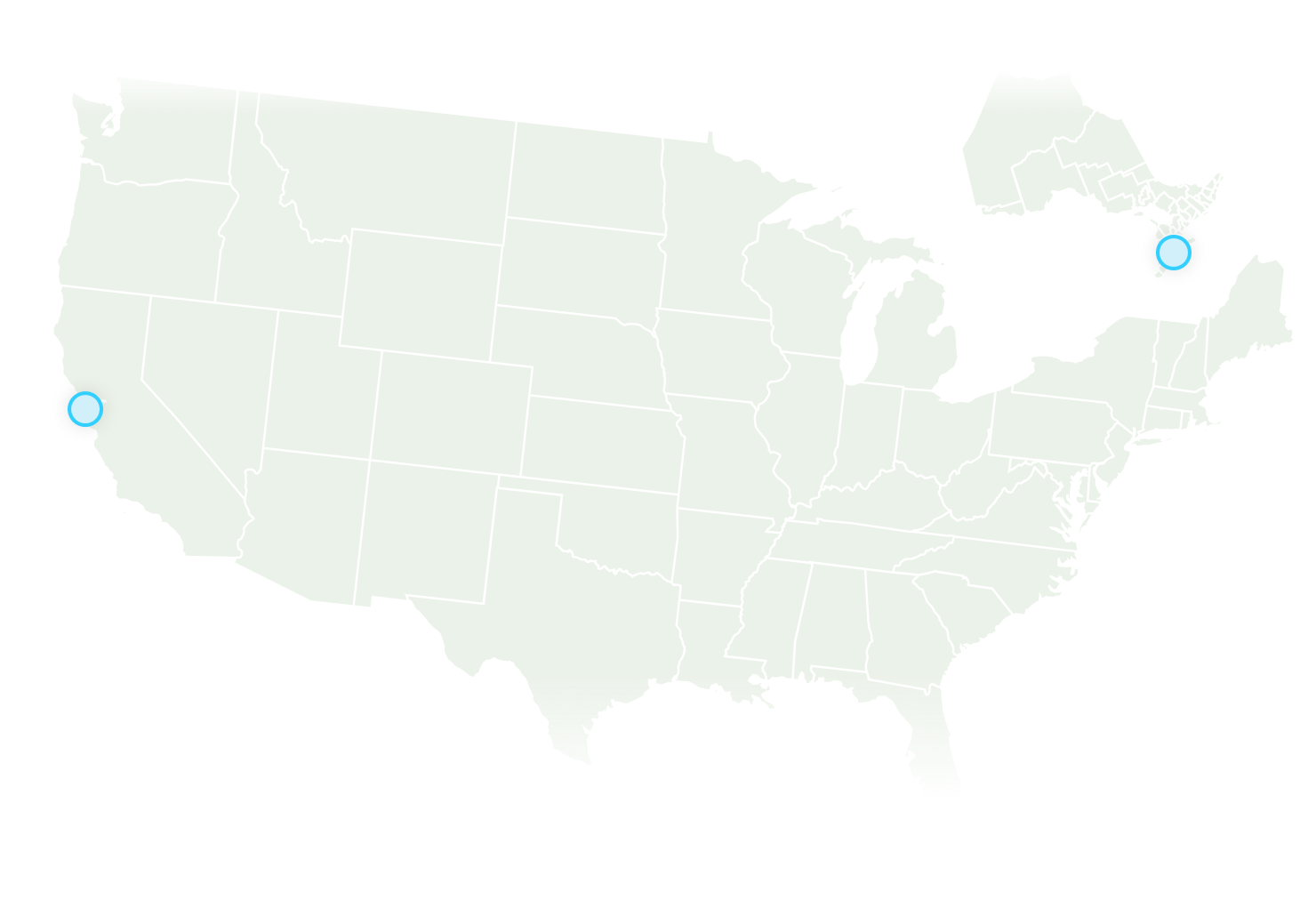

Powered By Our Global Network

Our data centers are powered by Canopy Wave's global, carrier-grade network, enabling you to reach millions of users around the world faster than ever before, with the security and reliability found only in proprietary networks.

Explore Canopy Wave

The Rise of Enterprise AI: Trends in Inferencing and GPU Resource Planning

AI Agent Summit Keynote by James Liao @Canopy Wave

Joint Blog - Accelerate Enterprise AI

by James Liao, CTO of Canopy Wave, and Severi Tikkas, CTO of ConfidentialMind

Accelerating Protein Engineering with Canopy Wave's GPUaaS

Foundry BioSciences Case Study

How to Run GPT-OSS Locally on a Canopy Wave VM

Step-by-step guide for local deployment

Canopy Wave GPU Cluster Hardware Product Portfolio

This portfolio outlines modular hardware components and recommended configurations